
- [Author: Y Kong et al.]

- [Keywords: TCP, Congestion Control, Reinforcement Learning]

Motivation

Previous congestion control methods (NewReno, Vegas [1], CUBIC [2], Compound TCP [3]):

- Are mechanism-driven instead of objective-driven

- Apply pre-defined operations in response to specific feedback signals

- Do not learn and adapt from experience

Related Work

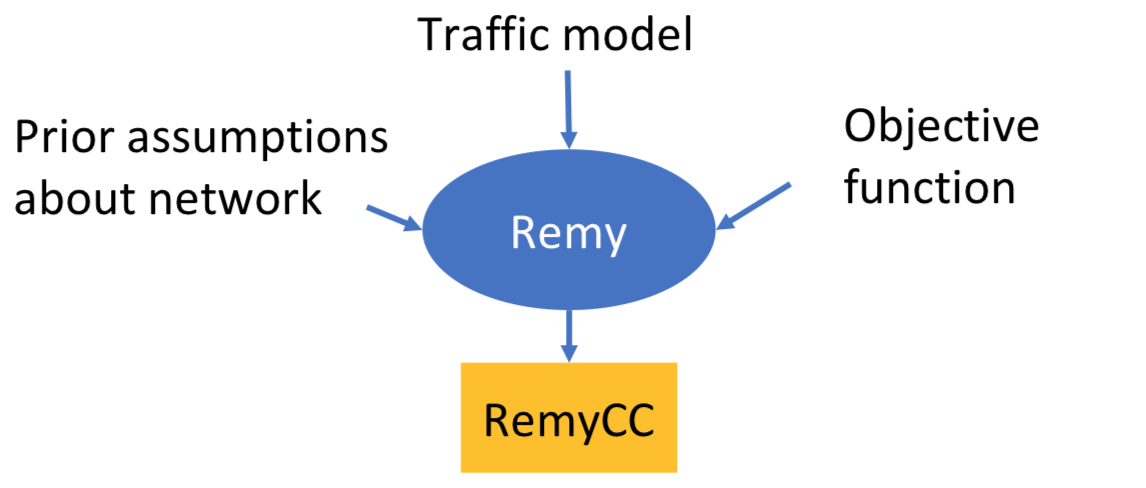

RemyCC [4]

- Uses a delay-throughput tradeoff as its objective function

- Trains offline to generate lookup tables

- Is inflexible when the network or traffic model changes
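To make "lookup tables" concrete, here is a minimal Python sketch of how a RemyCC-style sender might consult a precomputed rule table at run time. Following [4], the sender's memory is taken to be (ack_ewma, send_ewma, rtt_ratio), and an action is taken to be a window multiple m, a window increment b and a minimum gap r between sends. The uniform bucketing, the `TABLE` entries and all function names are hypothetical. Remy's offline optimizer, which partitions the memory space adaptively, is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Assumed RemyCC-style action: cwnd <- m * cwnd + b, and wait at least
    r seconds between successive sends."""
    m: float
    b: float
    r: float


def bucket(value: float, width: float) -> int:
    # Uniform bucketing is a simplification; Remy refines cells adaptively.
    return int(value // width)


def lookup(table, ack_ewma, send_ewma, rtt_ratio, default):
    """Map the sender's memory to the action stored for its table cell."""
    key = (bucket(ack_ewma, 0.01), bucket(send_ewma, 0.01), bucket(rtt_ratio, 0.5))
    return table.get(key, default)


def apply_action(action: Action, cwnd: float) -> float:
    # New window, clamped to at least one packet.
    return max(1.0, action.m * cwnd + action.b)


# Hypothetical table standing in for one generated by offline training.
TABLE = {
    (0, 0, 2): Action(m=1.0, b=1.0, r=0.0),    # RTT ratio in [1.0, 1.5): grow
    (0, 0, 4): Action(m=0.5, b=0.0, r=0.001),  # RTT ratio in [2.0, 2.5): back off
}
DEFAULT = Action(m=1.0, b=0.0, r=0.0)          # unseen cell: hold the window

if __name__ == "__main__":
    a = lookup(TABLE, 0.005, 0.005, 1.2, DEFAULT)
    print(apply_action(a, 10.0))  # 11.0
```

The table is fixed once training finishes. A change in the network or traffic model therefore means regenerating it, which is the inflexibility noted above.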

Q-TCP [5]

- Is based on Q-learning

- Is designed mostly with a single flow in mind

- Assumes sufficient buffering is available at the bottleneck
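Q-TCP is based on Q-learning, whose core is a one-step temporal-difference update that bootstraps from the best next action. The sketch below is generic tabular Q-learning, not Q-TCP's actual design. The state labels, the action set `ACTIONS` and the values of `alpha` (learning rate) and `gamma` (discount) are hypothetical.

```python
from collections import defaultdict

# Hypothetical action set: cwnd adjustments in packets.
ACTIONS = (-1, 0, 1)


def q_learning_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step (off-policy):
    Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(Q[(s_next, a2)] for a2 in ACTIONS)
    td_error = r + gamma * best_next - Q[(s, a)]
    Q[(s, a)] += alpha * td_error
    return Q[(s, a)]


if __name__ == "__main__":
    Q = defaultdict(float)      # unseen (state, action) pairs start at 0
    Q[("s1", 1)] = 2.0
    print(q_learning_update(Q, "s0", 0, 1.0, "s1"))  # approximately 0.28
```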

Contribution

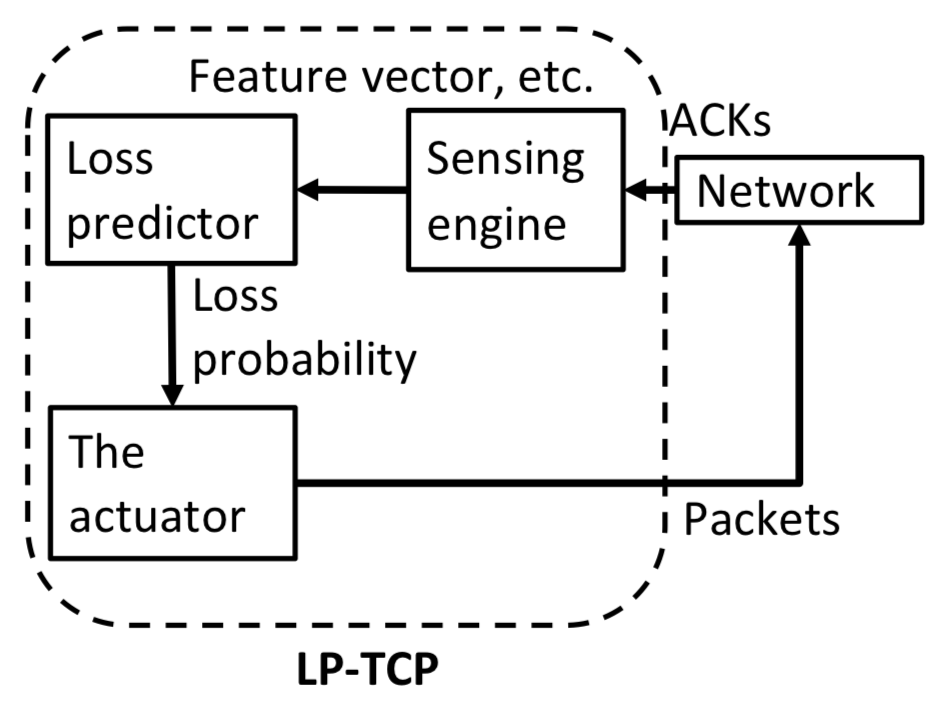

Loss-prediction-based TCP (LP-TCP)

Teach TCP to optimize its cwnd to minimize packet loss events during congestion avoidance.

- When a new ACK is received, cwnd += 1/cwnd

- Before sending a packet:

  - The sensing engine updates the feature vector:

    - cwnd

    - EWMA of ACK intervals

    - EWMA of sending intervals

    - minimum of ACK intervals

    - minimum of sending intervals

    - minimum RTT

    - time series (TS) of ACK intervals

    - TS of sending intervals

    - TS of RTT ratios

    - …

  - The loss predictor (LP) outputs a loss probability p:

    - Training data gathered through NewReno simulations on NS2

    - A random forest classifier is trained offline

    - The LP is re-trained upon network changes

  - If p < threshold, the actuator sends the packet

  - Otherwise, the packet is not sent, cwnd -= 1, and the sensing engine updates the feature vector

- Set the threshold to the value that maximizes M_e:

  - M_e = log(throughput) - 0.1 log(delay)

  - where delay = RTT - RTT_{min}
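The per-packet logic above can be sketched in Python as follows. This is a minimal sketch, not the authors' NS2 implementation. It tracks only the EWMA and minimum features and omits the time-series features. The EWMA weight `ALPHA = 0.125` and the one-packet floor on cwnd are assumptions. A hypothetical `predictor` callable, which maps the feature list to a loss probability p, replaces the random forest; a trained classifier's probability output could be plugged in instead.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Optional

ALPHA = 0.125  # assumed EWMA weight; the source does not give one


def ewma(prev: Optional[float], sample: float) -> float:
    # Seed the average with the first sample instead of 0.
    return sample if prev is None else (1 - ALPHA) * prev + ALPHA * sample


@dataclass
class SensingEngine:
    """Keeps a subset of LP-TCP's features up to date."""
    ack_ewma: Optional[float] = None
    send_ewma: Optional[float] = None
    ack_min: float = math.inf
    send_min: float = math.inf
    rtt_min: float = math.inf

    def on_ack(self, ack_interval: float, rtt: float) -> None:
        self.ack_ewma = ewma(self.ack_ewma, ack_interval)
        self.ack_min = min(self.ack_min, ack_interval)
        self.rtt_min = min(self.rtt_min, rtt)

    def on_send(self, send_interval: float) -> None:
        self.send_ewma = ewma(self.send_ewma, send_interval)
        self.send_min = min(self.send_min, send_interval)

    def features(self, cwnd: float) -> List[Optional[float]]:
        # Rebuilt on every call, so a cwnd decrement shows up next time.
        return [cwnd, self.ack_ewma, self.send_ewma,
                self.ack_min, self.send_min, self.rtt_min]


class LPTCPSender:
    def __init__(self, predictor: Callable[[list], float],
                 threshold: float, cwnd: float = 10.0):
        self.predictor = predictor  # hypothetical stand-in for the LP
        self.threshold = threshold
        self.cwnd = cwnd
        self.sensing = SensingEngine()

    def on_new_ack(self, ack_interval: float, rtt: float) -> None:
        self.sensing.on_ack(ack_interval, rtt)
        self.cwnd += 1.0 / self.cwnd  # congestion-avoidance increase

    def before_send(self, send_interval: float) -> bool:
        """Return True if the packet is sent, False if it is withheld."""
        p = self.predictor(self.sensing.features(self.cwnd))
        if p < self.threshold:
            self.sensing.on_send(send_interval)  # actuator sends the packet
            return True
        # Predicted loss: hold the packet and shrink the window by one
        # (the floor of one packet is our assumption).
        self.cwnd = max(1.0, self.cwnd - 1.0)
        return False


if __name__ == "__main__":
    # Toy predictor (hypothetical): loss is likely once cwnd reaches 12.
    sender = LPTCPSender(lambda f: 0.9 if f[0] >= 12 else 0.1, threshold=0.5)
    sender.on_new_ack(ack_interval=0.01, rtt=0.1)
    print(sender.before_send(0.01))  # True, since p = 0.1 < 0.5
```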

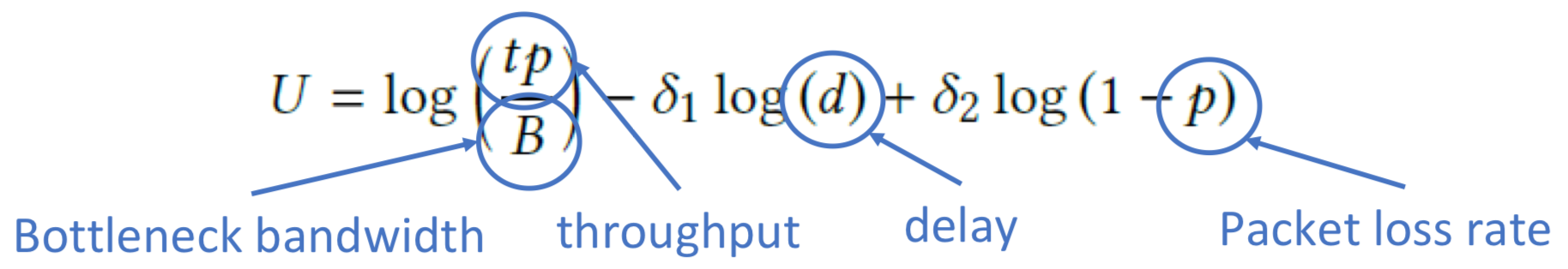
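The threshold-selection rule amounts to evaluating M_e for each candidate threshold and keeping the best one. The sketch below assumes natural logarithms; the base only rescales M_e and does not change which threshold wins. It also assumes the mean RTT of a run stands in for RTT and that per-threshold measurements arrive in a hypothetical `results` mapping. The numbers in the example are made up.

```python
import math


def m_e(throughput: float, rtt: float, rtt_min: float) -> float:
    """M_e = log(throughput) - 0.1 * log(delay), where delay = RTT - RTT_min."""
    delay = rtt - rtt_min
    if throughput <= 0 or delay <= 0:
        raise ValueError("throughput and delay must be positive")
    return math.log(throughput) - 0.1 * math.log(delay)


def pick_threshold(results: dict) -> float:
    """results maps threshold -> (throughput, mean RTT, RTT_min) measured
    with that threshold; return the threshold with the largest M_e."""
    return max(results, key=lambda th: m_e(*results[th]))


if __name__ == "__main__":
    # Made-up measurements (throughput in Mbit/s, RTTs in seconds).
    results = {
        0.3: (10.0, 0.12, 0.10),
        0.5: (12.0, 0.15, 0.10),
        0.7: (12.5, 0.30, 0.10),
    }
    print(pick_threshold(results))  # 0.5
```

A higher threshold withholds fewer packets. That raises throughput but also queueing delay, and the 0.1 weight on log(delay) sets how much extra delay is worth a given throughput gain.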

Reinforcement-learning-based TCP (RL-TCP)

Learn to adjust cwnd to increase a utility function.

- State s_n:

  - EWMA of the ACK inter-arrival time

  - EWMA of the packet inter-sending time

  - RTT ratio

  - slow start threshold

  - current cwnd size

- Action a_n:

  - cwnd += a_n, where a_n ∈ {-1, 0, +1, +3}
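RL-TCP's state and action spaces can be written down directly in Python. The field list and the action set {-1, 0, +1, +3} come from the description above. Two details are assumptions made for illustration: the RTT ratio is defined as current RTT over minimum RTT, and `discretize` uses coarse buckets to turn the state into a key for a tabular learner.

```python
from dataclasses import dataclass
from typing import Tuple

ACTIONS = (-1, 0, 1, 3)  # a_n: change applied to cwnd (packets)


@dataclass(frozen=True)
class RLState:
    ack_ewma: float   # EWMA of ACK inter-arrival time (s)
    send_ewma: float  # EWMA of packet inter-sending time (s)
    rtt_ratio: float  # assumed: current RTT / minimum RTT (>= 1)
    ssthresh: float   # slow start threshold (packets)
    cwnd: float       # current congestion window (packets)

    def discretize(self) -> Tuple[int, int, int, bool, int]:
        """Coarse, capped buckets (illustrative widths) usable as a table key."""
        return (
            min(int(self.ack_ewma / 0.005), 9),
            min(int(self.send_ewma / 0.005), 9),
            min(int((self.rtt_ratio - 1.0) / 0.25), 7),
            self.cwnd >= self.ssthresh,
            min(int(self.cwnd // 10), 9),
        )


def apply_action(cwnd: float, a: int) -> float:
    """cwnd += a_n, clamped to at least one packet (the clamp is our assumption)."""
    if a not in ACTIONS:
        raise ValueError(f"unknown action {a}")
    return max(1.0, cwnd + a)
```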

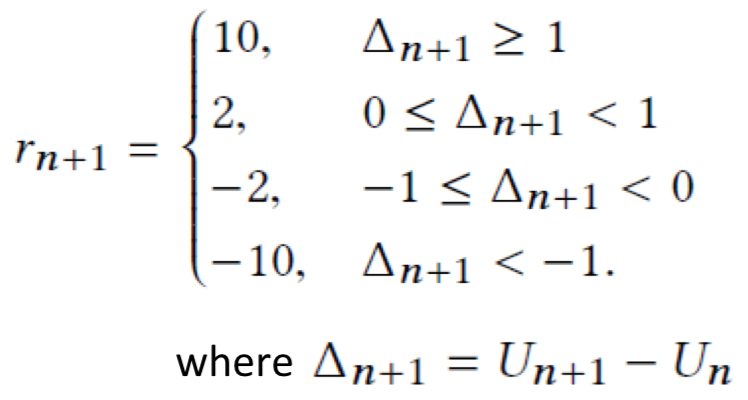

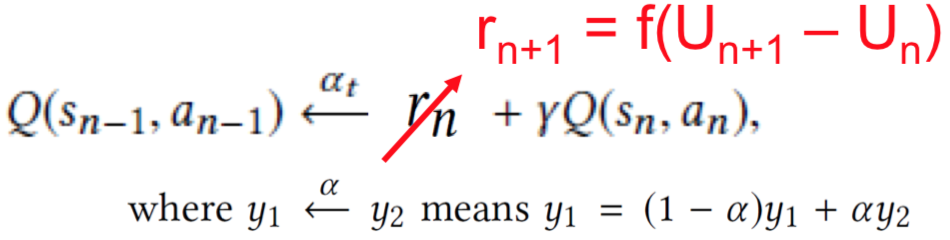

- Reward R_{n+1}

- Q-learning:

  - Update every RTT

- SARSA:

  - Temporal credit assignment of the reward

- Action selection:

  - ε-greedy exploration and exploitation
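The learning loop can be sketched with tabular SARSA and ε-greedy action selection. SARSA is on-policy: it bootstraps from the action actually chosen next rather than from the max that Q-learning uses. The reward observed one RTT after acting is credited back to the state-action pair that produced it. This is a minimal sketch under stated assumptions. One loop iteration stands for one RTT, and `env_step` is a hypothetical simulator hook returning (reward, next state). States are hashable keys, such as a discretized state tuple, and `alpha`, `gamma` and `epsilon` are illustrative values. The paper's exact reward and value representation are not reproduced.

```python
import random
from collections import defaultdict

ACTIONS = (-1, 0, 1, 3)  # cwnd increments a_n


def epsilon_greedy(Q, state, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit the best known action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: Q[(state, a)])  # ties go to the first action


def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.9):
    """Q(s,a) <- Q(s,a) + alpha * (r + gamma * Q(s',a') - Q(s,a))."""
    td_error = r + gamma * Q[(s_next, a_next)] - Q[(s, a)]
    Q[(s, a)] += alpha * td_error
    return Q[(s, a)]


def train(env_step, s0, n_rtts, epsilon=0.1, seed=0):
    """Run n_rtts decision steps; env_step(s, a) -> (reward, next_state)."""
    rng = random.Random(seed)
    Q = defaultdict(float)
    s = s0
    a = epsilon_greedy(Q, s, epsilon, rng)
    for _ in range(n_rtts):
        r, s_next = env_step(s, a)                 # R_{n+1}, s_{n+1}, one RTT later
        a_next = epsilon_greedy(Q, s_next, epsilon, rng)
        sarsa_update(Q, s, a, r, s_next, a_next)   # credit R_{n+1} to (s_n, a_n)
        s, a = s_next, a_next
    return Q
```

Consider a toy environment whose reward equals the chosen increment. With `epsilon=0.0`, `train(lambda s, a: (float(a), s), "x", 100, epsilon=0.0)` locks onto a = 0 and never tries +3. A nonzero epsilon is what lets the agent discover the larger reward.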

Evaluation

LP-TCP

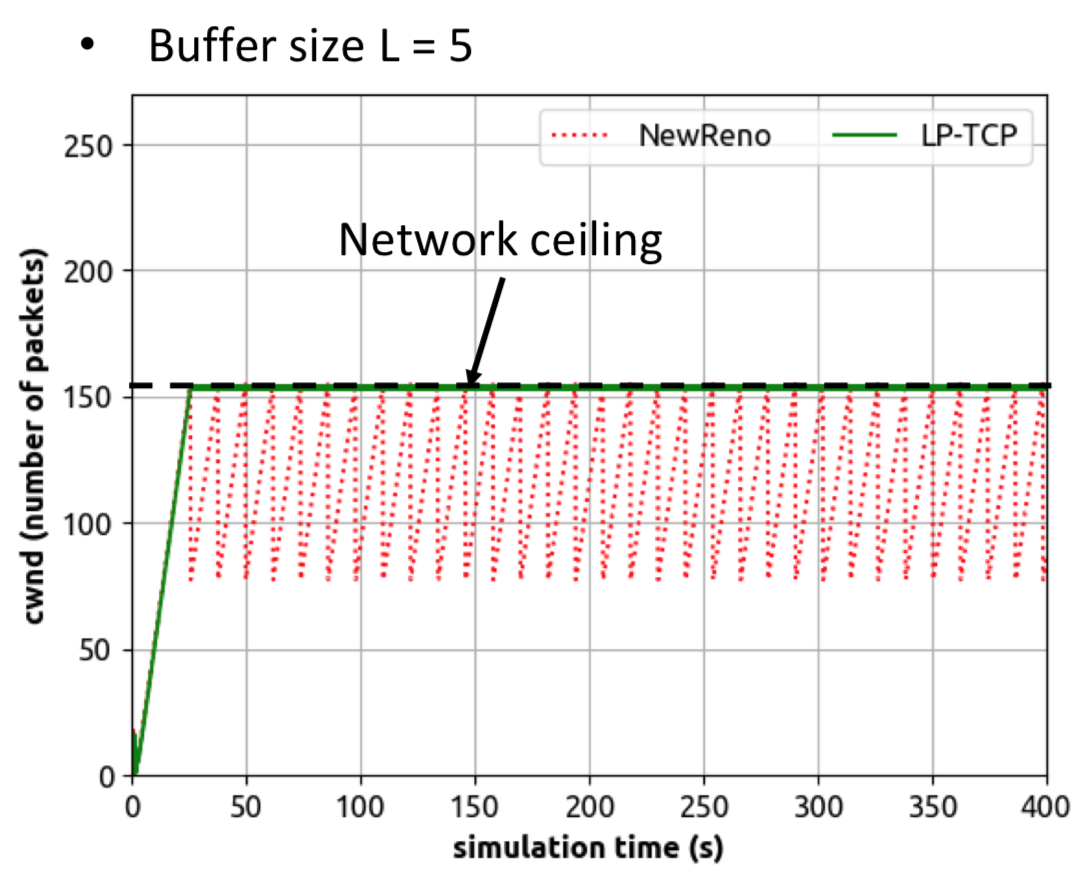

LP-TCP predicts all packet losses during congestion avoidance and keeps cwnd at the network ceiling.

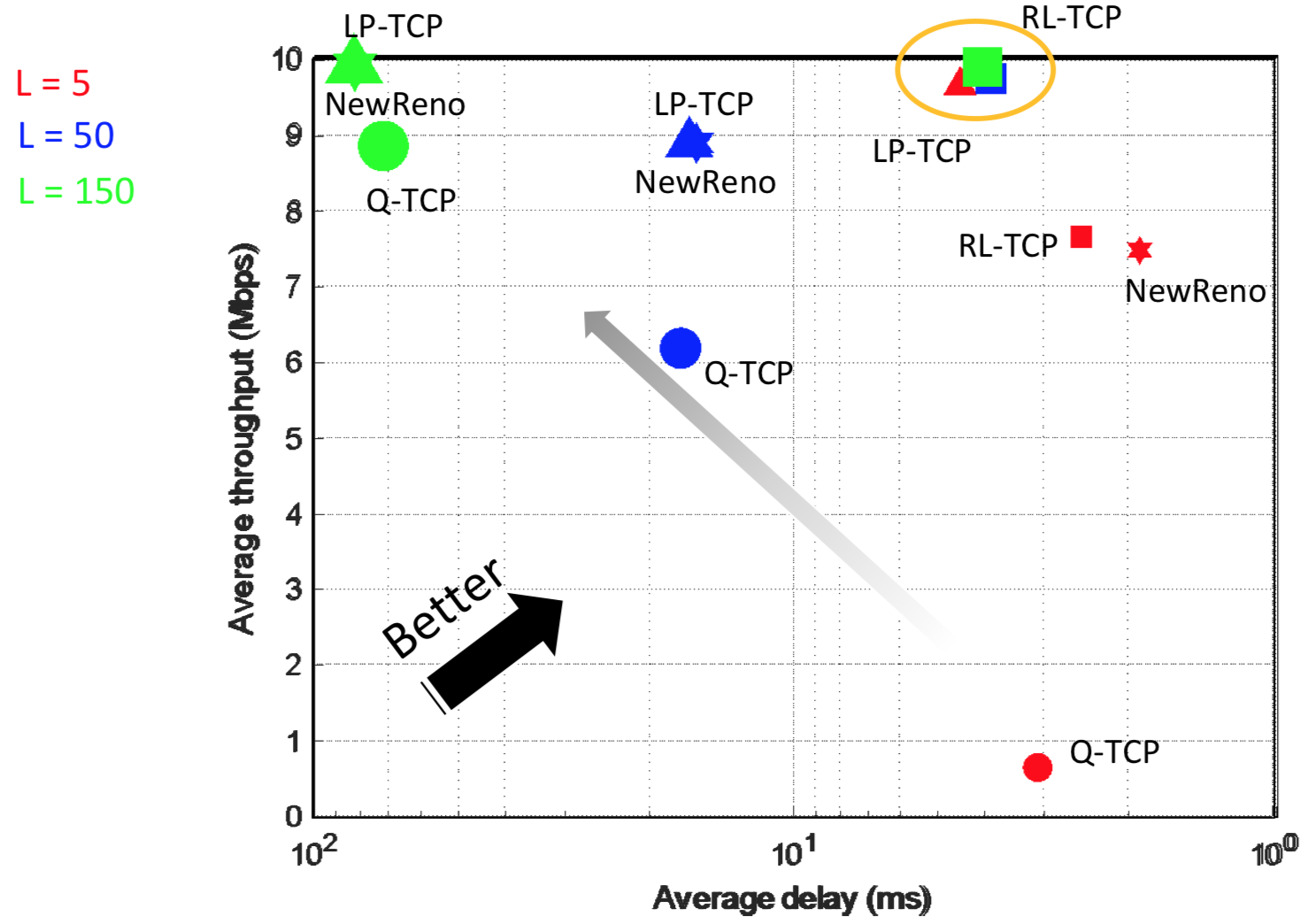

Single Sender - Varying buffer size L

- LP-TCP has the best throughput (M_e) when L = 5

- RL-TCP has the best throughput (M_e) when L = 50 and L = 150

- RL-TCP's performance is less sensitive to the buffer size

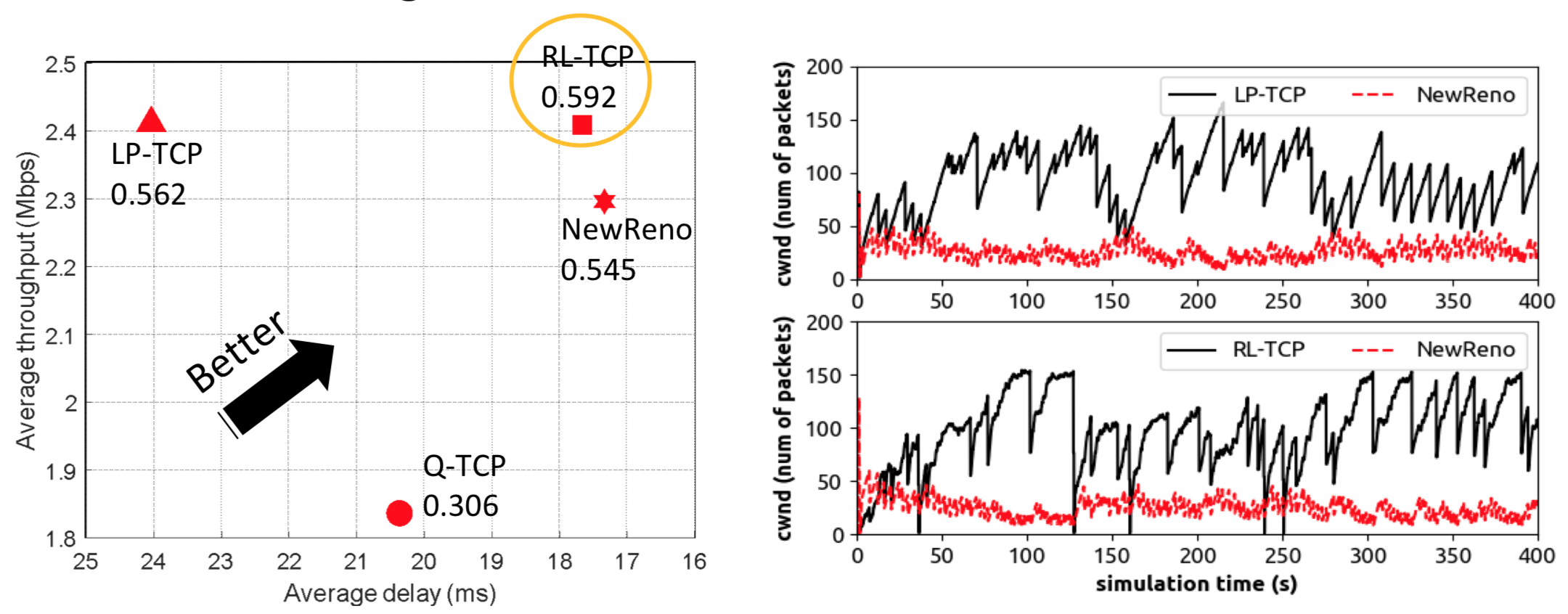

Multiple Senders

- Buffer size L = 50

- Left: 4 homogeneous senders (all using the same scheme)

- Right: 3 NewReno senders and 1 LP-TCP or RL-TCP sender

Future Work

- Explore policy-based RL-TCP

- Improve fairness for learning-based TCP congestion control schemes

References

- [1] TCP Vegas: End to end congestion avoidance on a global Internet

- [2] CUBIC: a new TCP-friendly high-speed TCP variant

- [3] A compound TCP approach for high-speed and long distance networks

- [4] TCP ex machina: computer-generated congestion control

- [5] Learning-based and data-driven TCP design for memory-constrained IoT